Conversational User Interface (CUI for short) is, in layman’s terms, another way for you to interact with, engage with and immerse yourself in an array of digital products and services.

Let’s think of it as an extension of our current interaction arsenal. We’re all familiar with clicking, tapping and swiping our way around a screen as a means to an end, and CUI should allow us to achieve the same results with less effort, simply by entering into a conversation… Or at least that’s the big idea. It might just be the monumental shakeup that a stagnating digital industry needs, but is it for better or for worse, and should we embrace it?

In its current state, CUI comes in two forms. The first relies solely on a spoken conversation between you and the interface, with no means of seeing anything visual and no need to. The second combines conversation with graphical user interface input, either through a text-based chat system (just like Facebook Messenger or WhatsApp) or through suggested, predetermined cues that can be clicked or tapped to move the interaction on to its next stage. In either case, you’ve more than likely engaged with some form of CUI this week, whether you’ve realised it or not.

Let’s start on a positive.

I’ve become used to shouting ‘OK Google’ across my living room to summon an official adjudicator in an argument that I might be having with my girlfriend. “Who played Andy Dufresne in The Shawshank Redemption?”, “Are there plans to build a bridge from Russia to Alaska?”, “Why do planes that are flying to New York fly up towards Iceland?”. That’s it. No further intervention is required, and just five seconds after starting a conversation the problem is solved. There’s one happy party and, more often than not, it’s her, not me.

Conversational UI in this sort of scenario serves its purpose brilliantly. I haven’t had to pick up my phone, swipe through my PIN, open my browser and search for an answer to my question. Even then, I might have had to sift through several websites and paragraphs of text to find a definitive answer that is factual and to the point. In reality, I just wouldn’t have bothered and my question would have gone unanswered. The beauty is that I’ve purely and simply engaged in a conversation and received an audible, spoken response. Whether or not it is based on fact depends on how much trust you are willing to put in the technology, but I’m going with it.

Reflecting on the experience as a user, it’s a win-win. I’ve completely eradicated the cumbersome and somewhat unnecessary steps involved in finding the answer to my question manually. Fewer steps, quicker results, better experience. I haven’t had to lift a finger.

Then I hit a stumbling block.

The next question in my series, “Where can I fly to from LBIA?”, yielded a rather different response, and this is where I began to question the practicality of CUI in certain contexts. Rather than reeling off a list of destinations from the other side of the room, it said “these are the top results” and then fell silent. This is where the CUI ends and the GUI begins, but is that a problem or a blessing?

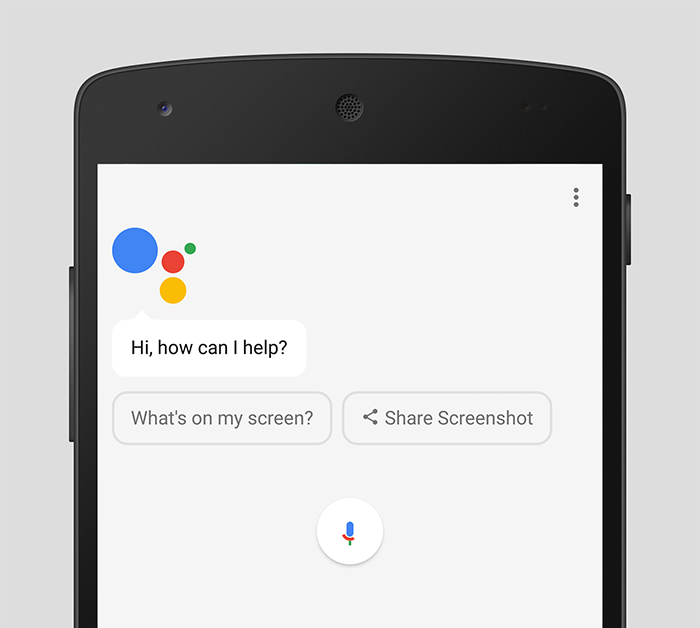

I picked up my phone and took a look at the screen. Google Assistant had presented me with an array of cards packed with information. I could swipe left to reveal more cards, or tap a button to be taken to a search results page. Either way, the GUI communicated a wealth of information to me at a glance. My initial reaction was that it would have been better for the CUI to read it out to me. But in reality, would I have listened, and would it have made any sense? Probably not, and listening to an automated voice ramble through three or four different cards would almost certainly have taken far longer than glancing at my screen.

This is the point at which design comes into the equation. What is the best possible outcome for the user, how do you determine what that is, and how do you quantify it? It’s a difficult question, and the answer will inevitably differ from one user to the next. In my own mind, I would have liked Google Assistant to read out a list of destinations. Whether or not they were airline-specific or seasonal wouldn’t really have mattered there and then; all I asked was “Where can I fly?”. After answering that initial question, I would have expected Google Assistant to prompt me with a question of its own, something along the lines of “Would you like to book a flight?” or “Would you like to know prices?”. From that point, I could have engaged in a deeper conversation to find exactly what I wanted and ultimately book the flight, or at least share some suggestions with my girlfriend.

In the case of Google Home or Amazon Alexa, where there is no GUI at all, how would my request have been handled? I can only assume that I would have ended up in a fifteen-minute conversation in order to digest a deluge of information that might have taken only seconds on screen.

Arguably, the cards presented to me on screen were not really relevant to my search either, but they did give me the opportunity to digest a considerable amount of information quickly and act on it promptly. I think this makes the case for a harmonious relationship between the two interfaces. If most of the heavy lifting can be done through a meaningful conversation that gets me to the results I want, it makes sense to have that conversation. If at any point during the conversation I would like to look at something visual, I should be able to. After all, a picture is worth a thousand words, but I’d also like the option to carry on the conversation if I wish to.

So what’s next?

Although CUI is proving its worth for everyday, mundane tasks, it’s not without its issues, and I definitely wouldn’t have the confidence to use it for anything more than asking basic questions or checking the weather. I can only imagine that it’ll get better as the big players improve their machine learning techniques to create truly contextual and more realistic conversations. The two biggest obstacles, in my mind, are the limitations of spoken conversation and the issue of trust. How many times do you find yourself having to show somebody a diagram, a picture or a map to help explain something better?

Of course, spoken language is only really half of the conversation. Body language, facial expressions, posture and gestures are equally important in understanding exactly what someone is trying to say. Can technology adapt to the point where CUI designers can use sensors to read and interpret such signals and create a better, more realistic experience?

On the subject of trust, how do I know that I am really getting the best price for my flight? If I have a straightforward conversation with Google Assistant and agree to book a flight that it suggests, how do I know whether or not that particular airline is paying Google to promote its services? Has Google Assistant effectively given me a spoken advert? Do I have to ask Google to compare prices for the best deal? Arguably, the same problem exists with a GUI; it is just my personal preconception that information presented on screen is somehow more open and accurate than information that may be hidden behind layers of conversation. Either way, I shouldn’t really have to dig for it, nor do I want to.

Getting started.

So where do you begin in the world of Conversational UI design? Granted, a new technology and an entirely new field of design can be quite daunting. It’s also worth bearing in mind that just because it’s got ‘design’ in the title, it doesn’t necessarily mean that you need to pursue it. The first thing I realised once I started experimenting was that it’s probably more of an opportunity for someone with a deep understanding of language and human behaviour. Nonetheless, getting to grips with the basics and making a start is surprisingly easy.

I have been experimenting with a chatbot-building service called ‘It’s Alive’. You can have a go for yourself at itsalive.io. It offers an intuitive and relatively straightforward insight into the logistics of creating a conversational interface built on Facebook Messenger. You can publish the bot to your own site, test it, converse with it and share it with whoever you want, so give it a try and see what you can come up with.

In my next post, I’ll be talking about a CUI experiment that I’m working on for a future site concept. Can we scrap the conventional site navigation as we know it? We’ll see…

by tomw